Overview - Day 2

Getting Started

Day 2 of the software development lifecycle is all about deploying and managing the applications that have been developed. These activities are primarily the domain of SREs and Sys Admins, but it is important that Developers understand and use these concepts and tools during their Day 1 activities to help support the application components throughout the lifecycle.

Day 2 Concepts/Tools Explained

Continuous Delivery

In the IBM Garage Method, one of the Develop practices is continuous delivery. A preferred model for implementing continuous delivery is GitOps, where the desired state of the operational environment is defined in a source control repository (namely Git).

What is Continuous Delivery?

Continuous delivery is the DevOps approach of frequently making new versions of an application’s components available for deployment to a runtime environment. The process involves automation of the build and validation process and concludes with a new version of the application that is available for promotion to another environment.

Continuous delivery is closely related to continuous deployment. The distinction is:

- Continuous delivery deploys an application when a user manually triggers deployment

- Continuous deployment deploys an application automatically when it is ready

Typically, continuous deployment is an evolution of a continuous delivery process. An application is ready for deployment when it passes a set of tests that prove it doesn’t contain any significant problems. Once these tests have been automated in a way that reliably verifies the application components, the deployment can be automated. Additionally, for continuous deployment it is important to employ other best practices around moving and managing changes in an environment: blue-green deployments, shadow deployments, and feature toggles, to name a few. Until these practices are in place and verified, it is best to stick with continuous delivery.
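The difference between the two models can be sketched as a single gate. This is a minimal illustration, not part of any particular tool: the `Release` type, the `should_deploy` function, and the `auto_deploy` and `approved` flags are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Release:
    version: str
    tests_passed: bool  # result of the automated validation suite


def should_deploy(release: Release, auto_deploy: bool, approved: bool = False) -> bool:
    """Decide whether a release may be deployed.

    auto_deploy=True models continuous deployment: passing tests is enough.
    auto_deploy=False models continuous delivery: a person must also approve.
    """
    if not release.tests_passed:
        return False  # a failing release is never deployed in either model
    return auto_deploy or approved


release = Release(version="1.4.0", tests_passed=True)
print(should_deploy(release, auto_deploy=False))                 # delivery, not yet approved
print(should_deploy(release, auto_deploy=False, approved=True))  # delivery, approved
print(should_deploy(release, auto_deploy=True))                  # deployment
```

In this sketch, moving a component from continuous delivery to continuous deployment amounts to flipping `auto_deploy` once its tests are trusted.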

As with most cloud-native practices, the move from continuous delivery to continuous deployment would not be done in a “big bang” but incrementally, as different application components are ready.

CI/CD integration

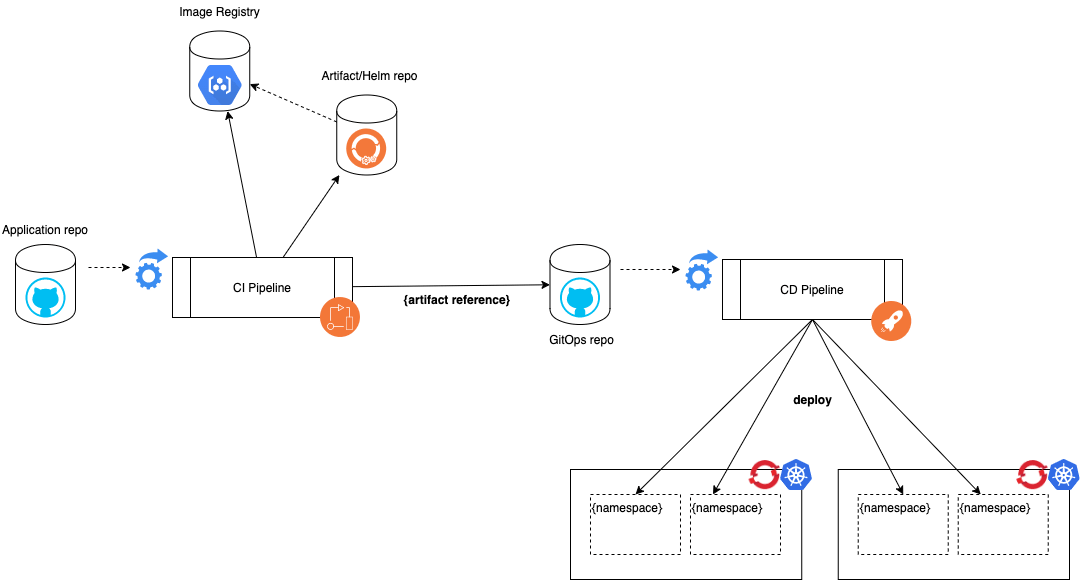

For the full end-to-end build and delivery process, both the CI and CD pipelines are used. When working in a containerized environment such as Kubernetes or Red Hat OpenShift, the responsibilities between the two processes are clearly defined:

- The CI pipeline is responsible for building, validating, and packaging the “raw materials” (source code, deployment configuration, etc.) into versioned, deployable artifacts (container images, Helm charts, published artifacts, etc.)

- The CD pipeline is responsible for applying the deployable artifacts into a particular target environment

A change made to one of the source repositories triggers the CI process.

The CI process builds, validates, and packages those changes into deployable artifacts that are stored in the image registry and artifact repository(ies).

The last step of the CI process updates the GitOps repository with information about the updated artifacts.

At a minimum, this step updates the version number to the newly released versions of the artifacts, but depending on the environment it might also update the deployment configuration.
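As a rough sketch of the version update, the function below rewrites an image tag in the text of a configuration file. The file layout (an `image:` block with a `tag:` entry), the registry name, and the `bump_image_tag` helper are assumptions made for illustration; a real GitOps repository may store versions differently, and the commit and push that would follow are not shown.

```python
import re


def bump_image_tag(values_text: str, new_tag: str) -> str:
    """Return values_text with the first `tag:` entry set to new_tag."""
    updated, count = re.subn(
        r"^([ \t]*tag:[ \t]*).*$",
        # A function replacement avoids misreading digits in new_tag
        # as part of a backreference such as \11.
        lambda match: match.group(1) + new_tag,
        values_text,
        count=1,
        flags=re.MULTILINE,
    )
    if count == 0:
        raise ValueError("no 'tag:' entry found")
    return updated


# Hypothetical contents of a values file in the GitOps repository.
values = (
    "image:\n"
    "  repository: registry.example.com/team/my-app\n"
    "  tag: 1.3.2\n"
)
print(bump_image_tag(values, "1.4.0"))
```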

Changes to the GitOps repository trigger the CD pipeline to run.

In the CD pipeline, the configuration describing the desired state as defined in the GitOps repository is reconciled with the actual state of the environment and resources are created, updated, or destroyed as appropriate.
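The reconciliation described above can be sketched as a comparison of two maps of resource name to configuration. This is a simplified illustration only: the `reconcile` function and the resource names are hypothetical, and real CD tools compare full Kubernetes resources rather than plain dictionaries.

```python
def reconcile(desired: dict, actual: dict) -> dict:
    """Compare desired and actual state and return the actions needed.

    Both arguments map a resource name to its configuration.
    """
    return {
        # Defined in Git but missing from the environment.
        "create": sorted(name for name in desired if name not in actual),
        # Present in both, but the configuration differs.
        "update": sorted(
            name for name in desired
            if name in actual and desired[name] != actual[name]
        ),
        # Running in the environment but no longer defined in Git.
        "destroy": sorted(name for name in actual if name not in desired),
    }


desired = {"web": {"image": "my-app:1.4.0"}, "worker": {"image": "my-worker:2.0.0"}}
actual = {"web": {"image": "my-app:1.3.2"}, "cron": {"image": "my-cron:0.9.0"}}
print(reconcile(desired, actual))
# {'create': ['worker'], 'update': ['web'], 'destroy': ['cron']}
```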

Tools to support Continuous Delivery

The practice of continuous delivery (CD) can be accomplished in different ways and with different tools. It is possible and certainly valid to use the same tool for both CI and CD (e.g. Tekton or Jenkins), provided you enforce a clear separation between the two processes. Typically, that results in two distinct pipelines that respond to changes in the two different Git repos: the source repo and the GitOps repo.

Another class of tools is particularly suited for Continuous Delivery and GitOps. The following is by no means an exhaustive list but it does provide some of the common tools used for CD in a cloud-native environment:

Secret Management

Deploying an application into containers involves both the application logic and the associated configuration. The application logic is packaged into a container image so that it can be deployed but in order to make the container image portable across different environments, the application configuration should be managed separately and applied to the application container image at deployment time.

Fortunately, container platforms like Red Hat OpenShift and Kubernetes provide a mechanism to easily supply the configuration at deployment time: ConfigMaps and Secrets. Both ConfigMaps and Secrets represent information as key-value pairs and allow that information to be attached to a running container in a number of different ways. Unlike ConfigMaps, Secrets are intended to hold sensitive information (like passwords) and have additional access control facilities to limit who can read and use that information.
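Two common ways of attaching this information to a container are environment variables and files in a mounted volume, where each key becomes a file. From the application's side, reading such a value might look like the sketch below. The `read_setting` helper, the `/etc/app-config` mount path, and the `LOG_LEVEL` key are assumptions chosen for illustration.

```python
import os
from pathlib import Path


def read_setting(name: str, mount_dir: str = "/etc/app-config", default=None):
    """Look up a setting from an environment variable, then from a mounted file."""
    value = os.environ.get(name)
    if value is not None:
        return value
    # When a ConfigMap or Secret is mounted as a volume, each key is a file.
    path = Path(mount_dir) / name
    if path.is_file():
        return path.read_text().strip()
    return default


# Falls back to the default when neither source provides the value.
print(read_setting("LOG_LEVEL", default="info"))
```

Because the image only reads its settings at runtime, the same image can be promoted across environments unchanged.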

With a GitOps approach to continuous delivery, the application container image and the associated configuration are represented in the Git repository together. When the desired state defined in Git is applied to an environment, the relevant Kubernetes resources like Deployments, ConfigMaps, and Secrets are generated from the provided Git configuration.

A common issue when doing GitOps is how to handle sensitive information that should not be stored in the Git repository (e.g. passwords, keys, etc.). There are two approaches to handling this issue:

- Inject the values from another source into Kubernetes Secret(s) at deployment time

- Inject the values from another source into the pod at startup time via an InitContainer

The “other source” in this case would be a key management system that centralizes the storage and management of sensitive information. There are a number of key management systems available to manage the secret values:

- Key Protect

- Hyper Protect

- HashiCorp Vault

Use the key management system at deployment time

CD with ArgoCD covers how to use ArgoCD to do GitOps, including how to manage sensitive information in a key management system.
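As a generic illustration of the deployment-time approach (not the mechanism ArgoCD itself uses), the sketch below builds a Kubernetes Secret manifest from values fetched from elsewhere, so that only key names need to live in Git. The `build_secret` function, the `fetch_value` callback, and the in-memory `fake_store` standing in for a key management system are all hypothetical.

```python
import base64
import json


def build_secret(name: str, keys: list, fetch_value) -> dict:
    """Build a Secret manifest whose values come from fetch_value(key)."""
    data = {
        # Values in a Secret's `data` field are base64-encoded strings.
        key: base64.b64encode(fetch_value(key).encode("utf-8")).decode("ascii")
        for key in keys
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": data,
    }


# Stand-in for a real key management system; for illustration only.
fake_store = {"db-password": "s3cr3t"}
secret = build_secret("my-app-credentials", ["db-password"], fake_store.__getitem__)
print(json.dumps(secret, indent=2))
```

Note that base64 is an encoding, not encryption, which is one reason the rendered manifest should not be committed to Git.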

Use the key management system at pod startup time

Coming soon

Monitoring

In the IBM Garage Method, one of the Operate practices is to automate application monitoring. Sysdig automates application monitoring, enabling an operator to view stats and collect metrics about a Kubernetes cluster and its deployments. The environment includes an IBM Cloud Monitoring with Sysdig service instance configured with a Sysdig agent installed in the environment’s cluster. Simply by deploying your application into the environment, Sysdig monitors it. Just open the Sysdig web UI from the IBM Cloud dashboard to browse your application’s status.

Log Management

In the IBM Garage Method, one of the Operate practices is to automate application monitoring, including logging. Imagine your application isn’t working right in production even though the environment is fine. What information would you want in your logs to help you figure out what’s wrong with your application? Build logging messages for that information into your application.
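As one possible shape for such messages, the sketch below uses Python's standard `logging` module and includes identifying context (which order, what went wrong) in each line. The `orders` logger name, the `process_order` function, and the `key=value` message style are illustrative choices, not requirements of any logging service.

```python
import logging

# With no other arguments, basicConfig sends log records to standard error.
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s %(message)s",
)
logger = logging.getLogger("orders")


def process_order(order_id: str, quantity: int) -> bool:
    """Process an order, logging enough context to diagnose failures later."""
    logger.info("processing order order_id=%s quantity=%d", order_id, quantity)
    if quantity <= 0:
        logger.error(
            "rejected order order_id=%s reason=invalid_quantity quantity=%d",
            order_id,
            quantity,
        )
        return False
    logger.info("completed order order_id=%s", order_id)
    return True


process_order("A-1001", 2)
process_order("A-1002", 0)
```

Including the same identifier in every message about an operation makes it easier to filter the relevant lines once logs from many applications are collected in one place.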

Given that your application is logging, as are lots of other applications and services in your cloud environment, these logs need to be managed and made accessible. LogDNA adds log management capabilities to a Kubernetes cluster and its deployments. The environment includes an IBM Log Analysis with LogDNA service instance configured with a LogDNA agent installed in the environment’s cluster. Simply by deploying your application into the environment, LogDNA collects the logs. Just open the LogDNA web UI from the IBM Cloud dashboard to browse your application’s logs.