Overview - Day 1

Getting Started

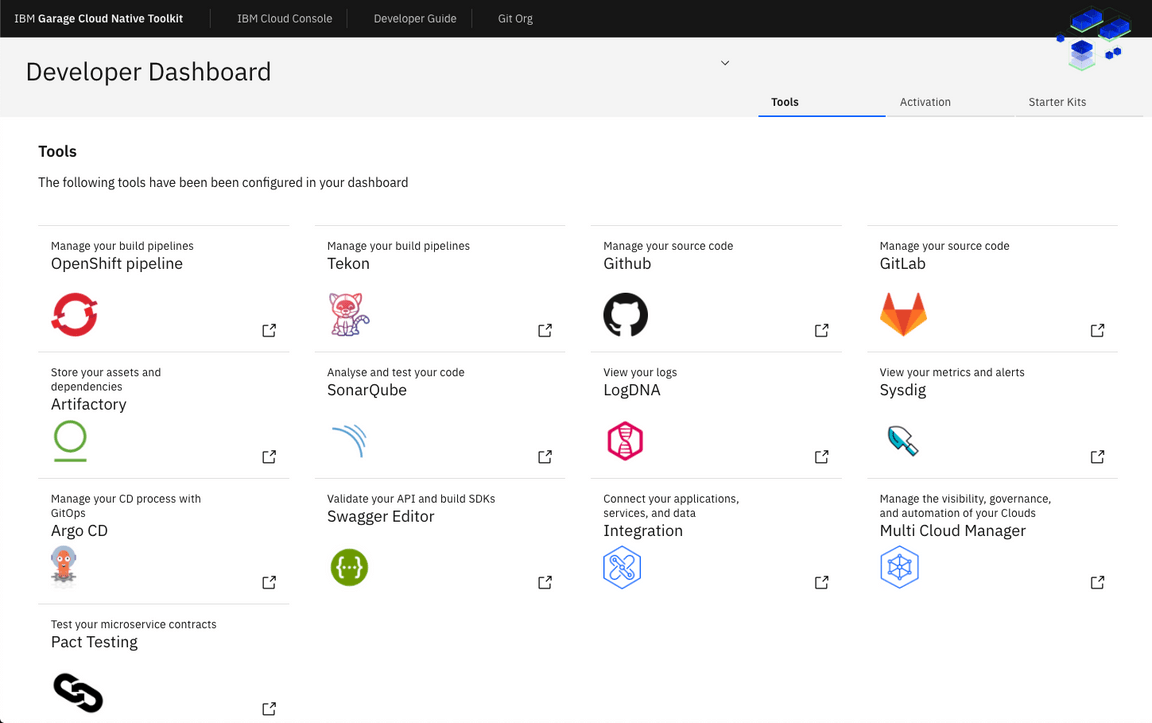

If you already have access to a development cluster that has been set up with the Toolkit, you can get started deploying an application to the cluster with an enterprise-grade DevOps pipeline. While you are there, you can also explore the Cloud-Native Toolkit Developer Dashboard and the OpenShift console to learn how to use those resources to build cloud-native applications more productively.

Day 1 Concepts/Tools Explained

Artifact Management

In the DevOps process, Artifact Management generally refers to the activities around storing and managing assets that are produced during the continuous integration process. Depending upon the development language, an “asset” could be any number of things:

- Library jar file

- NPM package

- Helm chart

- etc

Besides the contents of the asset, many of those artifact types also involve a particular protocol for how they are shared and consumed (e.g. a Maven repository for jar libraries).
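As an illustration of such a protocol, the sketch below computes where a jar would live in a repository that follows the standard Maven layout. The coordinates shown are hypothetical, and the function ignores classifiers and snapshot versions for brevity.

```python
def maven_artifact_path(group_id: str, artifact_id: str, version: str,
                        packaging: str = "jar") -> str:
    """Build the repository-relative path for an artifact in the Maven layout."""
    # Dots in the group id become directory separators.
    group_path = group_id.replace(".", "/")
    return f"{group_path}/{artifact_id}/{version}/{artifact_id}-{version}.{packaging}"


# Hypothetical coordinates, used only for illustration.
print(maven_artifact_path("com.example", "inventory-lib", "1.0.0"))
```

A client that knows the coordinates can derive the download location on its own, which is what lets build tools resolve dependencies from any repository that follows the same layout.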

There are a number of tools available to manage artifacts, from roll-your-own file systems to enterprise-grade software. Currently the Cloud-Native Toolkit supports provisioning the following Artifact Management tools:

- Artifactory

- Nexus

Code Analysis

In IBM Garage Method, one of the Develop practices is to automate tests for continuous delivery, in part by using static source code analysis tools. SonarQube automates static code analysis and enables it to be added to a continuous integration pipeline. The environment’s CI pipeline (Jenkins, Tekton, etc.) includes a SonarQube stage. Simply by building your app using the pipeline, your code gets analyzed. To browse the findings, open the SonarQube UI.

What is Code Analysis?

Static code analysis (a.k.a. code analysis) is a method of debugging by performing automated evaluation of code without executing the program. The analysis is structured as a set of coding rules that evaluate the code’s quality. Analysis can be performed on source code or compiled code. The analyzer must support the programming language the code is written in so that it can parse the code like a compiler or simulate its execution.
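To make the idea of a coding rule concrete, here is a minimal sketch of a single rule written with Python's standard `ast` module. It parses source text without running it and reports every `except:` clause that names no exception type. The sample source and the function name are assumptions made for this example; they do not show how SonarQube implements its rules.

```python
import ast

# Hypothetical code under analysis. It is parsed, never executed.
SAMPLE_SOURCE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def find_bare_excepts(source: str) -> list[int]:
    """Return the line numbers of `except:` clauses that name no exception type."""
    tree = ast.parse(source)
    return sorted(
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    )


print(find_bare_excepts(SAMPLE_SOURCE))  # prints [5]
```

The rule works on the parsed syntax tree, which is why the analyzer has to understand the language the code is written in.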

Static code analysis differs from dynamic analysis, which observes and evaluates a running program. Dynamic analysis requires test inputs and can measure user functionality as well as runtime qualities like execution time and resource consumption. A code review is static code analysis performed by a human.

Static code analysis can evaluate several different aspects of code quality, such as:

- Reliability

    - Bug: Programming error that breaks functionality

- Security

    - Vulnerability: A point in a program that can be attacked

    - Hotspot: Code that uses a security-sensitive API

- Maintainability

    - Coding standards: Practices that increase the human readability and understandability of code

    - Code smell: Code that is confusing and difficult to maintain

    - Technical debt: Estimated time required to fix all maintainability issues

- Complexity

    - Code complexity: Code’s control flow and number of paths through the code

- Duplications

    - Duplicated code: The same code sequence appearing more than once in the same program

- Manageability

    - Testability: How easily tests can be developed and used to show the program meets requirements

    - Portability: How easily the program can be reused in different environments

    - Reusability: The program’s modularity, loose coupling, and limited interdependencies
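As one example of how such an aspect can be evaluated, the sketch below approximates code complexity by counting the branch points in each function. The set of node types counted is a simplifying assumption made for this example. Production analyzers apply more detailed, language-specific rules.

```python
import ast

# Node types that add a decision point. This set is a simplification
# chosen for the sketch, not a complete definition of complexity.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)

# Hypothetical code under analysis.
SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0:
            return "composite"
    return "other"
'''


def approximate_complexity(source: str) -> dict[str, int]:
    """Map each function name to 1 plus the number of branch points inside it."""
    result = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            branches = sum(
                isinstance(child, BRANCH_NODES) for child in ast.walk(node)
            )
            result[node.name] = 1 + branches
    return result


print(approximate_complexity(SAMPLE))  # prints {'classify': 4}
```

Branches inside a nested function also count toward the enclosing function in this sketch, which is acceptable for a rough estimate but worth knowing when reading the numbers.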

Static code analysis collects several metrics that measure code quality:

- Issues

    - Type: Bug, Vulnerability, Code Smell

    - Severity

        - Blocker: Bug with a high probability to impact the behavior of the application in production

        - Critical: Bug with a low probability to impact the behavior of the application in production, or a security vulnerability

        - Major: Code smell with high impact to developer productivity

        - Minor: Code smell with slight impact to developer productivity

        - Info: Neither a bug nor a code smell, just a finding

- Size

    - Classes: Number of class definitions (concrete, abstract, nested, interfaces, enums, annotations)

    - Lines of code: Lines of text that are not whitespace or comments

    - Comment lines: Lines of text containing significant commentary or commented-out code

- Coverage

    - Test coverage: Code that was executed by a test suite
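The size metrics can be illustrated with a short sketch that separates lines of code from comment lines. It assumes Python-style `#` comments and classifies whole lines only, so trailing comments and docstrings count as code. The sample text is hypothetical.

```python
# Hypothetical source text to measure.
SAMPLE = """\
# Compute a total.
def total(values):

    # Sum the values.
    return sum(values)
"""


def size_metrics(source: str) -> dict[str, int]:
    """Count non-blank lines, split into code lines and full-line comments."""
    code = comments = 0
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # blank lines count toward neither metric
        if stripped.startswith("#"):
            comments += 1
        else:
            code += 1
    return {"lines_of_code": code, "comment_lines": comments}


print(size_metrics(SAMPLE))  # prints {'lines_of_code': 2, 'comment_lines': 2}
```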

A quality gate defines a pass/fail policy that assesses whether the number of issues and their severity are acceptable.
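A quality gate of this kind could be sketched as a function that counts issues by severity and compares the counts with a policy. The `Issue` class and the threshold values below are hypothetical and do not reflect the default policy of any particular tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    type: str      # "bug", "vulnerability", or "code_smell"
    severity: str  # "blocker", "critical", "major", "minor", or "info"


# Hypothetical policy: the maximum number of issues tolerated per severity.
# Severities that are not listed here are not limited.
MAX_ALLOWED = {"blocker": 0, "critical": 0, "major": 5}


def passes_quality_gate(issues, max_allowed=MAX_ALLOWED) -> bool:
    """Return True when no severity exceeds its allowed count."""
    counts = Counter(issue.severity for issue in issues)
    return all(counts[sev] <= limit for sev, limit in max_allowed.items())


findings = [Issue("code_smell", "minor"), Issue("bug", "blocker")]
print(passes_quality_gate(findings))  # prints False
```

A CI pipeline stage can use the boolean result to decide whether the build continues or stops.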

Continuous Integration

In IBM Garage Method, one of the Develop practices is continuous integration. The environment uses a Jenkins pipeline to automate continuous integration.

What is Continuous Integration?

Continuous integration is a software development technique where software is built regularly by a team in an automated fashion. This quote helps explain it:

“Continuous Integration is a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily - leading to multiple integrations per day. Each integration is verified by an automated build (including test) to detect integration errors as quickly as possible. Many teams find that this approach leads to significantly reduced integration problems and allows a team to develop cohesive software more rapidly.”

– Martin Fowler

Image Registry

An Image Registry is a repository of versioned container images. It is perhaps a subset of the larger Artifact Management topic but has special considerations.

A specific protocol has been defined around building, pushing, tagging and pulling container images to and from an Image Registry. Typically, the continuous integration process is responsible for verifying and building the application source into an image and pushing it into the registry. At deployment time, the deployment descriptor (e.g. Kubernetes resource definition) references the image at its location within the image registry and the container platform pulls the image and manages the running image in the cluster. Tools like skopeo can also be used within the process to copy images from one registry to another.
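The image location that a deployment descriptor references is a single string that encodes the registry, the repository, and a tag or digest. The sketch below splits such a reference into its parts. It is a simplified parser written for illustration, it does not validate its input, and the host name in the usage line is hypothetical.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: Optional[str]
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split `[registry/]repository[:tag][@digest]` into its components."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)

    registry = None
    first, sep, rest = ref.partition("/")
    # Convention: the first path component is a registry host when it
    # contains a dot or a port, or when it is "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, ref = first, rest

    tag = None
    last_colon = ref.rfind(":")
    # A colon only marks a tag when it appears after the last slash.
    if last_colon > ref.rfind("/"):
        ref, tag = ref[:last_colon], ref[last_colon + 1:]

    return ImageRef(registry, ref, tag, digest)


print(parse_image_ref("registry.example.com:5000/team/app:1.2.3"))
```

When no registry component is present, the container platform falls back to whatever default registry it is configured with, which is why fully qualified references are easier to reason about in an enterprise setup.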

There are a number of options available for the Image Registry, both running in-cluster and outside of the cluster. Red Hat OpenShift even provides an image registry as part of the platform. While an intermediate image registry might be used during the CI process, in an enterprise environment it is ideal to have a centrally managed image registry from which vulnerability scans, certifications, and backups can be performed. Some of the available options include:

- IBM Cloud Image Registry

- Artifactory

- Nexus

- Red Hat OpenShift image streams

Contract Testing

In IBM Garage Method, one of the Develop practices is contract-driven testing. Pact automates contract testing and enables it to be added to a continuous integration pipeline. The environment’s CI pipeline (Jenkins, Tekton, etc.) includes a Pact stage. Simply by building your app using the CI pipeline, your code’s contract gets tested. To browse the results, open the Pact UI.

Contract testing is a testing discipline that ensures two applications (a consumer and a provider) have a shared understanding of the interactions or the contract between them.
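The idea can be sketched in plain Python without any testing library. The consumer records the request it sends and the response fields it relies on, and the provider is verified by replaying that request. This is not the Pact API; the contract format, the handler, and all names and values are hypothetical.

```python
# The contract: what the consumer sends and the response fields it relies on.
CONTRACT = {
    "request": {"method": "GET", "path": "/users/42"},
    "response": {"status": 200, "body_fields": {"id": int, "name": str}},
}


def provider_handle(method: str, path: str) -> tuple[int, dict]:
    """Stand-in for the real provider service."""
    if method == "GET" and path.startswith("/users/"):
        user_id = int(path.rsplit("/", 1)[1])
        return 200, {"id": user_id, "name": "Ada", "email": "ada@example.com"}
    return 404, {}


def verify_provider(contract: dict, handler) -> list[str]:
    """Replay the contract's request against the provider and list mismatches."""
    request, expected = contract["request"], contract["response"]
    status, body = handler(request["method"], request["path"])
    problems = []
    if status != expected["status"]:
        problems.append(f"status {status} != {expected['status']}")
    for field, field_type in expected["body_fields"].items():
        if field not in body:
            problems.append(f"missing field: {field}")
        elif not isinstance(body[field], field_type):
            problems.append(f"wrong type for field: {field}")
    return problems


print(verify_provider(CONTRACT, provider_handle))  # prints []
```

The provider may return extra fields, such as `email` here, without breaking the contract. Only the fields the consumer depends on are checked, which lets the provider evolve without coordinating every change.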